Graph Embedding Priors for Multi-task Deep Reinforcement Learning

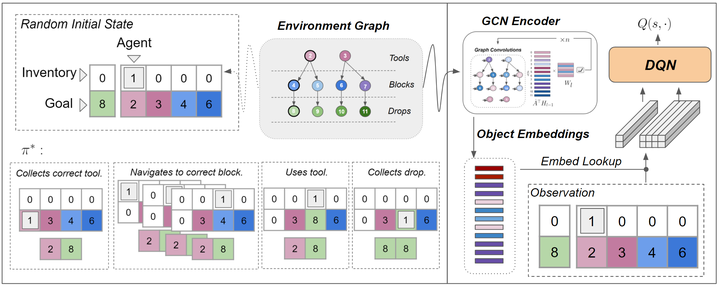

Architecture

Abstract

Humans appear to effortlessly generalize knowledge of similar objects and relations when learning new tasks. For example, humans playing Minecraft can learn how to use a tool to mine one block, then rapidly generalize that skill to mine others. We leverage graph-encoded object priors to capture this property and improve the performance of reinforcement learning agents across multiple tasks. We introduce a novel, flexible architecture that uses graph convolutional networks (GCNs), which provide a natural method to combine relational information over connected nodes. We evaluate our approach on a procedurally generated, multi-task environment, Symbolic Procgen. Our experiments demonstrate that the method generalizes across many tasks and scales to domains with hundreds of objects and relations. Additionally, we perform ablation studies that demonstrate robustness to noisy graph priors, suggesting that the method is suitable for leveraging graphs generated from large, unstructured sources of knowledge in real-world settings.
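To make concrete how a GCN combines relational information over connected nodes, the following is a minimal sketch of a single graph-convolution step. It uses the widely known propagation rule ReLU(D^-1/2 (A + I) D^-1/2 H W) and is not necessarily the layer used in this work. The three-object graph (`pickaxe`, `stone`, `iron`), the one-hot features, and the identity weight matrix are hypothetical values chosen only for illustration.

```python
import math


def matmul(a, b):
    """Plain-Python matrix product of two lists of rows."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def gcn_layer(adj, feats, weight):
    """One graph-convolution step: ReLU(D^-1/2 (A + I) D^-1/2 H W)."""
    n = len(adj)
    # Add self-loops so each node keeps its own features.
    a_hat = [
        [adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)
    ]
    # Symmetric degree normalization keeps feature scales comparable.
    inv_sqrt_deg = [1.0 / math.sqrt(sum(row)) for row in a_hat]
    a_norm = [
        [inv_sqrt_deg[i] * a_hat[i][j] * inv_sqrt_deg[j] for j in range(n)]
        for i in range(n)
    ]
    # Aggregate neighbor features, apply the linear map, then ReLU.
    out = matmul(matmul(a_norm, feats), weight)
    return [[max(0.0, v) for v in row] for row in out]


# Hypothetical object graph: node 0 = pickaxe, 1 = stone, 2 = iron.
# The pickaxe is related to both blocks; the blocks are not directly linked.
adj = [
    [0.0, 1.0, 1.0],
    [1.0, 0.0, 0.0],
    [1.0, 0.0, 0.0],
]
feats = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]  # one-hot (assumed)
weight = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]  # identity (assumed)

h1 = gcn_layer(adj, feats, weight)  # one hop: stone sees pickaxe, not iron
h2 = gcn_layer(adj, h1, weight)     # two hops: stone sees iron via pickaxe
```

After one layer, the `stone` embedding has no contribution from `iron` because the two nodes share no edge. After a second layer, information about `iron` reaches `stone` through their common neighbor `pickaxe`, which is the kind of sharing across related objects that the abstract describes.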