Locally Observable Markov Decision Process

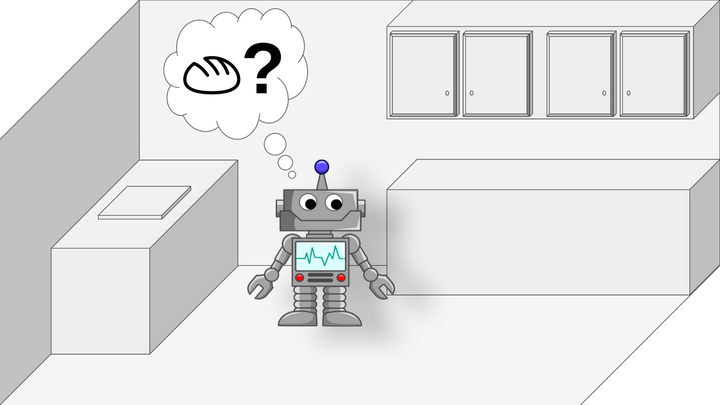

Localities, robots, and PBJs

Abstract

Real-world robot task planning is computationally intractable in part because of the complexity of dealing with partial observability. One approach to reducing planning complexity is to assume additional model structure, such as mixed observability, factored state representations, or temporally extended actions. We introduce a novel structured formulation, the Locally Observable Markov Decision Process, which assumes that partial observability stems from limited sensor range: objects outside sensor range are unobserved, but become fully observed once they are within sensor range. Plans that solve tasks of this type have a specific structure: they must pass through localities where objects transition from unobserved to fully observed. We also introduce a novel planner that reduces planning time via a hierarchy that structures the plan around these localities and interleaves online and offline planning. We present preliminary results in a challenging domain, which show that the locality assumption enables robots to plan effectively in the presence of this type of uncertainty.
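As an illustration of the locality assumption only, the following minimal sketch shows an observation function in which an object's position is returned exactly when it lies within sensor range and is hidden otherwise. The function name `observe`, the 2D positions, the Euclidean range test, and the object names are all hypothetical choices made for this example; they are not taken from the formulation or the planner described here.

```python
import math
from typing import Dict, Optional, Tuple

Position = Tuple[float, float]


def observe(
    robot: Position,
    objects: Dict[str, Position],
    sensor_range: float,
) -> Dict[str, Optional[Position]]:
    """Hypothetical local observation model.

    An object within `sensor_range` of the robot is fully observed (its true
    position is returned); an object outside that range is unobserved (None).
    """
    return {
        name: pos if math.dist(robot, pos) <= sensor_range else None
        for name, pos in objects.items()
    }


# Illustrative object layout (assumed, not from the paper's domain).
objects = {"peanut_butter": (1.0, 0.0), "jelly": (5.0, 5.0)}

# From the origin, only the nearby object is observed.
obs_start = observe((0.0, 0.0), objects, sensor_range=2.0)

# After moving close to the second object, it becomes fully observed,
# while the first falls out of range.
obs_moved = observe((5.0, 4.0), objects, sensor_range=2.0)
```

Under these assumptions, an object's observation flips from `None` to its true position only when the robot enters the region around it, which is the kind of transition the abstract refers to as a locality.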